As I discussed in an earlier blog post, AI technologies show promise for improving productivity, but they can also be used by bad actors. A frequently cited example is the story of a couple who were scammed by a criminal using voice-cloning technology to impersonate a family member in distress. The couple received a phone call from what seemed to be the husband’s mother, panicked and crying, before a man came on the line and told them to transfer first $500 and then another $250 to ensure her safety. After the couple paid, they called the supposed victim and found she had been asleep at home the whole time.

This scam is an example of a new form of voice phishing (vishing) that uses an AI-replicated voice of a person known to the target (a loved one, a friend, a coworker, or a boss) to bypass the target’s wariness of strangers. By tricking the target into believing someone they trust needs their help, the attacker can exploit the target’s altruism to obtain money, information, or credentials.

The story of this couple isn’t the only well-publicized instance of a vishing attack, and industry reports suggest such attacks are becoming more common:

- A couple in Canada were scammed out of $21,000 by an attacker impersonating their son.

- A company lost the equivalent of $25.6 million after employees were fooled by a deepfake of the company’s CFO in a video conference call.

- LastPass, a password management company, announced in April 2024 that one of its employees was targeted by a scammer using a deepfake of the CEO’s voice.

- Zscaler Research reports that 2023 saw a 60% increase in AI-driven phishing attacks.

- The Anti-Phishing Working Group reports that vishing attacks are increasing every quarter; they cite OpSec as saying that Q3 2023 had 260% more vishing attacks than Q4 2022.

How does AI vishing work?

The tools required to perform an AI vishing attack are readily available and make performing such an attack simple. Voice cloning is both inexpensive and easily deployable. CBS reported that for $5 and a 30-second audio clip of a voice to replicate, they were able to use a web-based voice cloning service to get that voice to say anything they typed. Such voice-cloning services are readily available and present themselves as legitimate businesses. When paired with any one of a number of voice transcription services and a chat service that can be told to present itself as a real person, an attacker can cheaply and easily impersonate anyone who’s ever spoken aloud in a video posted on the Internet.

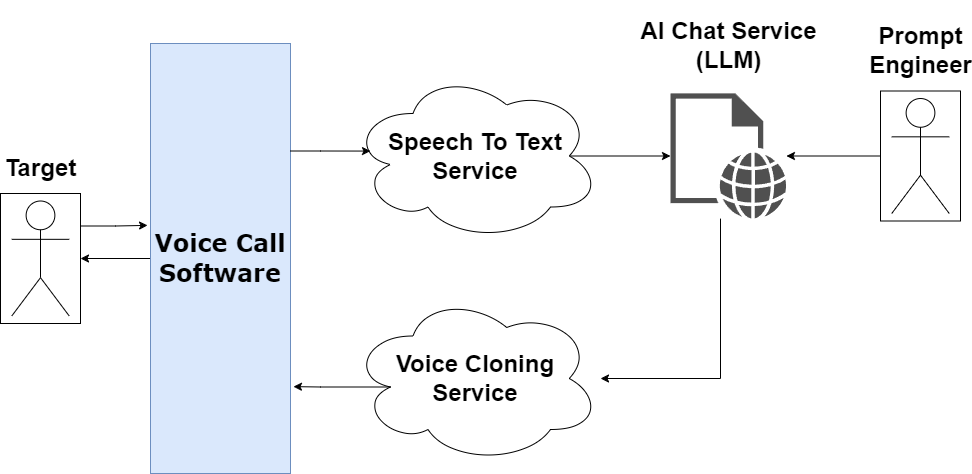

These attacks can be performed manually with pre-generated audio, but it’s also possible to orchestrate these services together into a system that could allow the deepfake to converse with the target, enhancing the illusion.

In the above system, incoming audio from the target is passed through speech-to-text transcription software and into an LLM-driven chatbot, which has been primed with a prompt to play the role of someone the target trusts. The output of the chatbot is run through a voice cloning service to emulate the voice of the role the chatbot is playing, and the resulting audio is played back to the target. In a successful attack, the target believes they’re speaking to the person being emulated and provides sensitive information or agrees to send money.

What steps can I take to help protect myself?

There are a few simple steps that you can take to help protect yourself from this type of attack:

- The Electronic Frontier Foundation recommends creating a shared, verbal family password to verify a family member is who they say they are.

- The FCC has multiple recommendations, including:

    - Don’t accept calls from unknown numbers.

    - Never give out personally identifying information in response to unexpected calls.

    - Be cautious if the caller requests information or money immediately.

    - If the caller claims to be a family member or friend in need, reach out to that person directly to confirm they need help.

    - If the caller claims to be from a company or government agency, hang up and call the company or agency using an official number.

    - Don’t respond to any questions, especially those that can be answered with “Yes.” (Inc. reports that even a short answer can be used to clone your voice.)

    - File a complaint with the FCC if you believe you’ve received an illegal call/text or if you think you’ve been scammed.

- In the event that you’ve fallen victim to an attack, Cisco recommends that you:

    - Alert your financial institutions of the possibility of fraudulent activity.

    - Change all potentially compromised passwords and other credentials.

    - Notify the organization the scammer claimed to represent.

    - File a complaint with the FTC and/or the FBI’s Internet Crime Complaint Center.

    - Immediately inform your company’s IT department or cybersecurity team if you’re an employee that disclosed sensitive corporate information.

    - Educate your employees about cybersecurity threats.
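For teams that want to turn the call-handling advice above into a written procedure (for example, a help-desk script or a training exercise), the checks can be expressed as a short decision routine. The Python below is only an illustrative sketch: the `IncomingCall` fields, the function names, and the wording of each action are hypothetical and are not taken from the EFF, the FCC, or Cisco. It assumes the family or team has already agreed on a shared verbal password.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class IncomingCall:
    """Hypothetical record of what a caller has claimed or asked for."""
    claims_to_be_known_person: bool = False
    claims_to_be_organization: bool = False
    requests_money_or_info: bool = False
    pressures_for_immediate_action: bool = False
    spoken_password: Optional[str] = None  # what the caller said when asked


def password_matches(spoken: Optional[str], expected: str) -> bool:
    """Compare the spoken password to the agreed one, ignoring case and extra spaces."""
    if spoken is None:
        return False
    return " ".join(spoken.lower().split()) == " ".join(expected.lower().split())


def recommended_actions(call: IncomingCall, shared_password: str) -> List[str]:
    """Return the precautions from the list above that apply to this call."""
    actions = []
    # A cloned voice can sound right, so rely on the shared password, not the voice.
    if call.claims_to_be_known_person and not password_matches(
        call.spoken_password, shared_password
    ):
        actions.append("Hang up and call the person directly on a number you already have.")
    if call.claims_to_be_organization:
        actions.append("Hang up and call the organization using its official number.")
    if call.requests_money_or_info and call.pressures_for_immediate_action:
        actions.append("Treat the urgency as a red flag; do not pay or share information.")
    return actions


if __name__ == "__main__":
    suspicious = IncomingCall(
        claims_to_be_known_person=True,
        requests_money_or_info=True,
        pressures_for_immediate_action=True,
        spoken_password="blue heron",
    )
    for step in recommended_actions(suspicious, shared_password="purple walrus"):
        print("-", step)
```

The sketch deliberately ignores how convincing the voice sounds, because that is exactly what a voice clone is designed to fake. A correct password only suppresses the first recommendation; it is still sensible to confirm any request for money through a separate channel.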

If your company is concerned about vishing or other advanced threats, we can help. Tangible Security offers a full stack of security services, including employee security awareness training to combat sophisticated social attacks, and security assessments. Contact us today.
